urban teaching

As I have stated elsewhere in these essays, I have tried to understand why my students’ abilities to learn and retain the material I was teaching were below my expectations. Several times I gave exams in which I attempted to measure explicitly their comprehension of questions and problem statements. I reported on one early experiment in the essay What Am I Supposed to Do?

I wrote the essay below before I had come up with the thesis of The Classroom Language Hypothesis, and I present it here as originally written. The analysis I report below was a major input into the formation of the Hypothesis.

In the experiment I report here, I showed students Geometry problems from worksheets that had already been assigned and which we had discussed in class, and I asked questions, not about the Geometry problems but about the problem statements (i.e., the worksheet questions): what are these questions asking you to do? In this way I sought to measure the students’ comprehension of the problem statements. I attempted to design my questions in such a way that a person who read and comprehended English well but had no knowledge of Geometry content could answer the questions correctly by studying the wording of the problem statements.

I built each question to look like an MCAS multiple-choice question, whose style was familiar to the students. At the beginning of the test I told the students in more than one way that the geometry problem statement in each box was from a worksheet they had been assigned, and that they were not to try to solve that problem but to answer the multiple-choice question right below the box.

As an example, between these lines is question 7 of the 10-question test.

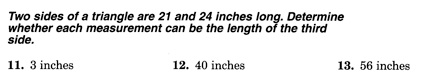

Multiple-choice question 7 refers to this problem statement:

______7. What can a correct answer to each of questions 11, 12, and 13 contain?

A. A length in inches.

B. Two lengths in inches.

C. The word “yes” or the word “no.”

D. The word “yes” or the word “no,” and a length in inches.

The correct answer is “C.” The percentages the four choices received were as follows:

A. 33%

B. 14%

C. 25% (The correct answer)

D. 27%

(Note that if all the students made their choices randomly, the expected percentages would be 25% for each of the four choices.) Assuming, as I am, that the students were being earnest and not perverse, and believing, as I do, that there is something that needs to be understood behind these results, one is forced to ask: What is operating here?
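One way to put a number on how close these percentages are to guessing is a chi-square goodness-of-fit test against a uniform 25% split. The sketch below is an illustration, not part of the original analysis. It assumes that all 51 students reported for the whole test answered question 7, which the essay does not state, and the names `ASSUMED_N` and `chi_square_vs_uniform` are invented for this example.

```python
# Reported percentages for choices A-D on question 7.
# They sum to 99 because of rounding, so they are rescaled below.
reported_percent = {"A": 33, "B": 14, "C": 25, "D": 27}

# ASSUMPTION: every one of the 51 students answered question 7.
ASSUMED_N = 51


def chi_square_vs_uniform(percentages, n):
    """Chi-square statistic comparing the responses with a uniform split."""
    total = sum(percentages.values())
    # Approximate observed counts recovered from the rounded percentages.
    observed = [n * p / total for p in percentages.values()]
    expected = n / len(observed)  # same expected count for every choice
    return sum((o - expected) ** 2 / expected for o in observed)


statistic = chi_square_vs_uniform(reported_percent, ASSUMED_N)

# Standard critical value for 3 degrees of freedom at the 5% level.
CRITICAL_5_PERCENT_DF3 = 7.815
consistent_with_guessing = statistic < CRITICAL_5_PERCENT_DF3

print(round(statistic, 2), consistent_with_guessing)
```

Under these assumptions the statistic comes out at roughly 4, below the 7.815 threshold, so the responses to this one question cannot be distinguished from random guessing by this test alone.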

The Whole Test

You can see the whole 10-question test in pdf form by clicking here. (I encourage you to study the test, perhaps give it to a few people, and tell me whether you believe it is fair and whether you think it measures anything interesting.) After handing out the test I asked the students to read the instructions at the top of the first page as I read them aloud, with the emphases shown, and with some additional interpolated explanations.

On average, 40% of the questions were answered correctly in a population of 51 students. (Given a few reasonable assumptions, a little analysis shows that of this 40% of the questions answered correctly, half were answered correctly by students who understood the question and the other half were lucky guesses.) I wrote a paper describing the results and presented it to the faculty of the math department. The general reactions of the teachers were (1) to see the results documented this way was shocking, but (2) these results were consistent with their (the teachers’) own experiences. You can see my report in pdf form, together with the original test (annotated with the answer statistics) as an appendix, by clicking here.
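A simple guessing model reproduces the half-and-half split mentioned in the parenthetical above. This is a sketch under stated assumptions, not necessarily the analysis used in the report: every question is either understood and answered correctly, or guessed uniformly among the four choices. The function name `fraction_understood` is invented for this example.

```python
from fractions import Fraction


def fraction_understood(observed_correct, num_choices=4):
    """Solve observed = k + (1 - k) / num_choices for k.

    k is the fraction of questions that were understood; the remaining
    (1 - k) are ASSUMED to be uniform random guesses.
    """
    guess_rate = Fraction(1, num_choices)
    return (observed_correct - guess_rate) / (1 - guess_rate)


observed = Fraction(40, 100)      # 40% of the questions answered correctly
understood = fraction_understood(observed)
lucky = observed - understood     # correct answers that were lucky guesses

print(float(understood), float(lucky))
```

Under this model 20% of the questions were understood and another 20% were lucky guesses, which together make up the observed 40%.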

Designing an effective assessment instrument is not easy and cannot be done well without extensive experimental evaluation. Therefore my results should not be seen as having precision; nevertheless they raise puzzling questions. The following discussion of what can go wrong in the design of question 7, for example, will throw some light on the context in which I gave the test.

What Can Go Wrong With Question 7?

There are many ways a reader can go astray trying to answer question 7. Several sophisticated, literate adults have gotten it wrong. Here are some issues with question 7 that occur to me.

1. The language of the worksheet problem statement in the black box is obscure.

2. The language of the worksheet problem statement is at too high a level for the reader.

3. The language of my multiple-choice question 7 is obscure.

4. The language of my question 7 is at too high a level for the reader.

5. The reader is not trained to distinguish, or is not capable of distinguishing, the separate natures of the Geometry problem in the box and the question about the Geometry problem immediately below the box. (A logician might call these the question and the meta-question.) Is making this distinction too much to ask of a high-school student?

6. The reader, not having comprehended the original instructions not to try to solve the geometry problems, finds the whole affair too confusing.

I suspect that each of these issues is present in varying degrees with different students. Here are concrete examples of these issues.

Issue 1. It may not be clear that there are three separate geometry problems in the box, numbered 11, 12, and 13. This information is carried by one word: each. At what point in the student’s development of reading comprehension does an understanding of this usage of “each” occur?

Issue 2. “Determine whether” is definitely a problem, and it appears multiple times in the workbook. I established earlier in the year that almost nobody in my classes knew what it meant. I actually gave a lecture (well before I gave this test) in which I wrote on the board in front of every class and repeated with examples:

“Determine whether” means “answer yes or no.”

Given that fact, I propose a seventh issue, which is general, not specific to this test question: Issue 7. Many of my students do not retain the material that is put before them (and that they are expected to study) to a degree a high-school math teacher can reasonably expect. (I know from multiple experiences that this statement is true. For example, two years in a row I had students asking me the week before the final exam the meaning of the upside-down-T “perpendicular” symbol; it was introduced the first month of the school year and used hundreds of times throughout the year.)

Issues 3 and 4. “What can a correct answer contain?” might be obscure. (If so, the whole test is worth little.) I was hoping, in part, that the four choices I presented to each question would make specific the meaning of this question. Also, in question 7 I attempted to clarify the meaning of “each” by being explicit in my wording of the question.

Issue 5. (If it is not reasonable to ask a meta-question then I probably can’t use a test like this to try to understand my students’ low comprehension; indeed, is it possible to teach high-school Geometry to someone who does not understand this distinction?)

Issue 6. The fact that some students tried to solve the Geometry problems tells me that this issue is applicable in some cases. Clearly one must take a different approach with these students.

Lessons

Creating problems and test questions that do not unfairly penalize students depending on their language abilities is very hard. I know from my classroom experience that the creators of the textbook system we used did not succeed very well at it. (Added later: I see now that part of our problem is insufficient understanding of the structure of what we call “language abilities”; the problem is much more than inadequate vocabulary.) That lesson then leads to the question of textbook adoption and curriculum planning. I was not involved in the selection of our text, but I strongly suspect that considerations such as those I have raised here were not given much weight. Given that a large fraction of our students do not hear much English at home, I have come to believe that these considerations are crucial to the success of our students, yet they play a very small role in the way we present the material.

Another observation: you will see that the Geometry problem statement in the box on page 1 of the test presents a decoding problem that will challenge many native speakers. When I had discussed the solution to this problem earlier in the year I found it necessary to teach my students how to parse the sentence; this was a skill that was absent in my classes.

A great many of my students could converse in a way that was indistinguishable from their primarily English-speaking peers. Yet I learned from extended contact with many of them that their vocabularies were very shallow. The introduction of one important word that is not part of a student’s normal vocabulary can shut down his or her comprehension for a while. We as a faculty have tried to address this problem, but I was not seeing results. I conclude that the traditional literary approaches to increasing comprehension that we have been using have not been sufficiently helpful to students who have to take the kind of course I was teaching.

© Copyright 2008 Mel Conway PhD

A Comprehension Experiment

Wednesday, February 27, 2008